Early 2022 Budget GPU Lineup: Intel ARC A380, Nvidia RTX 3050, AMD RX 6500 XT

The entry-level GPU is dead. At least, that is how we perceived the empty void below the current “mid-range” lineup of the RX 6600 XT, RX 6600 and RTX 3060. Amid the current GPU crisis, you either pay exorbitant prices for respectable frame rates, or keep using an outdated architecture to eke out a meager, very low-end gaming experience.

Starting in January 2022, though, budget-conscious enthusiasts may have reason to get excited again: Nvidia, AMD, and yes, even Intel are finally planning to fill the budget entry-level tier, which has sat empty for two years.

What “Budget Graphics Cards” Were Available in 2021?

Setting the used market aside, only four discrete graphics cards are commercially available brand new below the paper MSRP of the RTX 3060 and RX 6600:

- Nvidia GeForce GTX 1050 Ti – reliable, but becoming very outdated for triple-A gaming. Playing at 1440p is possible, but only in graphically simple games or titles released more than five years ago, around when the card itself launched.

- Nvidia GeForce GTX 1650 – ridiculed and lampooned at launch, during a healthier time for the PC market. Today it serves as the “nothing-else-but-this” option for very basic, entry-level triple-A gaming.

- Nvidia GeForce GTX 1660 Ti – supposed to be the upper entry-level option, for 1080p at high to ultra settings and 1440p at lower frame rates (roughly GTX 1070 performance). Disgustingly overpriced in 2021.

- Nvidia GeForce GTX 1660 Super – occupies nearly the same performance tier as the 1660 Ti at similar power draw, and is supposed to be cheaper. Also very disgustingly overpriced in 2021.

As for why no AMD cards are on the list: the entire RDNA1 lineup has been missing from shelves since late 2020, with higher-end models such as the RX 5700 XT selling for astronomical prices on the used market thanks to their excellent cryptomining performance.

So here we look at three budget GPUs coming to an online store or computer shop near you.

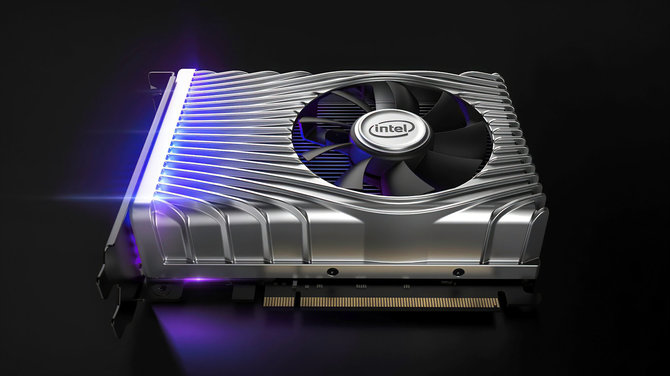

(Possibly) Best Budget Contender: Intel ARC A380

| Specification | Intel ARC A380 (leaked specs) |
| --- | --- |
| Process node | 6nm |
| GPU name | Xe-HPG DG2 |
| GPU cores | 1024 (128 EU) |
| Max boost clock | 2450 MHz |
| VRAM | 6GB GDDR6 |
| Memory bus | 96-bit |
| Memory speed | 14 to 16 Gbps |
| TFLOPS (FP32) | About 5 |
| TDP | 75W |
| PCIe power connector | None |
| Expected MSRP | At least $200 |
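The TFLOPS and memory rows can be sanity-checked with two common rules of thumb: peak FP32 throughput is shader count × 2 FLOPs per clock (one fused multiply-add) × boost clock, and peak memory bandwidth is bus width in bytes × per-pin data rate. A minimal Python sketch, assuming the leaked figures above (128 EUs of 8 ALUs each, 2450 MHz, a 96-bit bus at 14 to 16 Gbps) are accurate; the helper names are just for illustration:

```python
def fp32_tflops(shaders: int, boost_mhz: float) -> float:
    """Peak FP32 TFLOPS, assuming each shader retires one FMA (2 FLOPs) per clock."""
    return shaders * 2 * boost_mhz * 1e6 / 1e12


def bandwidth_gb_s(bus_bits: int, data_rate_gbps: float) -> float:
    """Peak memory bandwidth in GB/s: bus width in bytes times per-pin data rate."""
    return bus_bits / 8 * data_rate_gbps


# Leaked ARC A380 figures (128 EUs x 8 ALUs = 1024 shaders).
a380_tflops = fp32_tflops(128 * 8, 2450)
a380_bw_low = bandwidth_gb_s(96, 14)
a380_bw_high = bandwidth_gb_s(96, 16)

print(f"ARC A380: {a380_tflops:.2f} TFLOPS FP32")
print(f"ARC A380: {a380_bw_low:.0f} to {a380_bw_high:.0f} GB/s")
```

This works out to roughly 5.0 TFLOPS, and a 96-bit bus at 14 to 16 Gbps translates to about 168 to 192 GB/s of peak bandwidth. These are theoretical peaks, not measured performance.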

Intel’s upcoming GPUs have received very mixed reactions from PC enthusiasts, with opinions ranging from cautiously positive to predictions of outright disaster. The release of the GT 1030-esque DG1 did not exactly impress anyone, though its Xe-LP architecture doesn’t really represent what the Alchemist series will be.

As more information leaks, however, it’s becoming clear just how serious Intel is about making a big impact on the GPU market. Take the revealed product segmentation, which turned out to be very diverse: it ranges from products competing with an RTX 3070 or RX 6700 XT (ARC A780*) down to the lowest 4GB VRAM laptop variants (ARC A330*).

(*tentative names)

The ARC A380 sits at the lowest end of the current desktop Alchemist lineup (third from the bottom if laptop GPUs are included), and is intended as the “widely available workhorse” GPU. Remember how the GTX 750 Ti and 1050 Ti filled the entry-level market before? That is more or less how Intel wants the ARC A380 to be seen. While no one knows exactly how much it will cost, it is reasonable to assume, given the current market, that its lowest retail price will be around $200.

And its performance? According to at least one leak, possibly very close to a GTX 1650 Super. This time, at least, the 6GB of GDDR6 VRAM should be enough for many modern titles at reasonably high texture settings, preventing stutters in VRAM-hungry triple-A games such as Assassin’s Creed Valhalla.

Oh, and to be clear: no, the upcoming ARC A380 won’t end the GPU crisis by being built in Intel’s own fabs. Team Blue’s entire GPU lineup is slated to be manufactured on TSMC’s 6nm process node, so Intel is basically competing for the same constrained foundry capacity as Nvidia and AMD.

Estimated Performance of the ARC A380: Near a GTX 1650 Super, maybe even a GTX 1660

All the Rejected 3060s: Nvidia GeForce RTX 3050 (desktop)

| Specification | Nvidia GeForce RTX 3050 (leaked specs) |
| --- | --- |
| Process node | 8nm |
| GPU name | GA106-140-A1 |
| GPU cores | 2304 (18 SM) |
| Max boost clock | 1740 MHz |
| VRAM | 4GB GDDR6 |
| Memory bus | 128-bit |
| Memory speed | 14 Gbps |
| TFLOPS (FP32) | 8 |
| TDP | 90W |
| PCIe power connector | 1x 6-pin |
| Expected MSRP | Below $300 |

At this point, we all know that Nvidia has absolutely no plans to support gamers and is out to maximize its profits for as long as it can keep extending the “crisis”. The latest evidence is the dubious release of the RTX 2060 12GB (spoiler: not marketed as a gaming card at all) and the recent announcement of the baffling RTX 2050 laptop GPU tier.

That is why the recent RTX 3050 leaks are surprising at all, since all Team Green has done lately is push prices ever higher by artificially introducing better-binned dies of already known SKUs: the RTX 3070 Ti, the RTX 3080 Ti, and, next year, the completely unnecessary RTX 3090 Ti.

But if the information revealed so far is any indication, the RTX 3050 is turning out to be the least promising of the three GPUs, despite technically being the most powerful one. First, the TDP. According to the latest leaks, it would have a 90-watt TDP, which is quite odd, since that is barely more than half of the 170W that GA106 is usually given in RTX 3060 cards. Add the “traditional entry-level” 128-bit memory bus, and we are looking at performance well below the RTX 2060, even though a new xx50 card should traditionally match the previous generation’s xx60 card.
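To put those leaked numbers in context, here is a quick arithmetic check in Python. It assumes the RTX 3060's reference 170W board power and 192-bit, 15 Gbps memory, and the RTX 2060's reference 192-bit, 14 Gbps memory, alongside the leaked RTX 3050 figures from the table; the helper name is illustrative only:

```python
def bandwidth_gb_s(bus_bits: int, data_rate_gbps: float) -> float:
    """Peak memory bandwidth in GB/s: bus width in bytes times per-pin data rate."""
    return bus_bits / 8 * data_rate_gbps


# Leaked RTX 3050 power budget vs. the RTX 3060's reference 170W.
rtx3050_tdp_w, rtx3060_tdp_w = 90, 170
power_share = rtx3050_tdp_w / rtx3060_tdp_w

rtx3050_bw = bandwidth_gb_s(128, 14)  # leaked: 128-bit, 14 Gbps
rtx2060_bw = bandwidth_gb_s(192, 14)  # RTX 2060 reference: 192-bit, 14 Gbps
rtx3060_bw = bandwidth_gb_s(192, 15)  # RTX 3060 reference: 192-bit, 15 Gbps

print(f"RTX 3050 power budget: {power_share:.0%} of an RTX 3060")
print(f"Bandwidth (GB/s): 3050 {rtx3050_bw:.0f}, 2060 {rtx2060_bw:.0f}, 3060 {rtx3060_bw:.0f}")
```

The leaked card ends up with roughly half of the RTX 3060's power budget and 224 GB/s of peak bandwidth, two-thirds of the RTX 2060's 336 GB/s. Peak bandwidth is only one factor, so treat this as a rough indicator rather than a performance prediction.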

Second is the split between 4GB and 8GB models. Remember, the RTX 3060 already has a paper MSRP of $329, so the 8GB model (which presumably would use more of the currently expensive GDDR6) would have to slot in between the 4GB version and the RTX 3060. If the leaked GTX 1660-level performance turns out to be accurate, we are looking at a GPU that brings no real architectural improvement, yet is going to be introduced at around $30 to $50 more?

But hey, on the bright side, at least consumers will have “entry-level” access to DLSS, right?

Estimated Performance of the RTX 3050 (desktop): Possibly a bit more than the GTX 1660

Sad, Sad Memory Bus: AMD RX 6500 XT

| Specification | AMD RX 6500 XT (leaked specs) |
| --- | --- |
| Process node | 6nm |
| GPU name | Navi 24 |
| GPU cores | 1024 (16 CU) |
| Max boost clock | 2500 MHz |
| VRAM | 4GB GDDR6 |
| Memory bus | 64-bit |
| Memory speed | 16 Gbps |
| TFLOPS (FP32) | 5 |
| TDP | 107W |
| PCIe power connector | 1x 6-pin |
| Expected MSRP | Below $300 |

One interesting thing about the launch of the RX 6600 XT and RX 6600 is that they actually sold worldwide at very near MSRP during the first wave of their release. Of course, it did not help that AMD had already set its official prices with the inflated GPU market in mind, but at the very least, the earliest RX 6600 XT offered GTX 1080 Ti-level performance at a street price well below what an RTX 3060 was going for.

As such, one might initially assume that AMD’s entry-level contender will follow a similar pricing approach. However, with the RTX 3050 looming on the horizon, AMD cannot go far beyond $250 for the RX 6500 XT. In fact, Team Red has quite an incentive to revisit its Polaris-era marketing playbook and aggressively push the RX 6500 XT’s paper MSRP even lower.

On the specification side, it should ideally provide a decent improvement over the RX 5500 XT (essentially RX 480 version 3.0). Sixteen RDNA2 compute units with 1024 shading cores should do the trick nicely. However, its glaringly narrow 64-bit memory bus looks like the immediate bottleneck. It may not hurt much at medium graphical settings, but it would inevitably confine the card to 1080p: at 1440p, no amount of settings compromise (or even efficient use of features like FSR) is likely to compensate.
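The bandwidth concern is easy to quantify. A minimal Python sketch, assuming the leaked 64-bit, 16 Gbps configuration from the table and the RX 5500 XT's reference 128-bit, 14 Gbps GDDR6 setup; the helper name is illustrative only:

```python
def bandwidth_gb_s(bus_bits: int, data_rate_gbps: float) -> float:
    """Peak memory bandwidth in GB/s: bus width in bytes times per-pin data rate."""
    return bus_bits / 8 * data_rate_gbps


rx6500xt_bw = bandwidth_gb_s(64, 16)   # leaked: 64-bit, 16 Gbps
rx5500xt_bw = bandwidth_gb_s(128, 14)  # RX 5500 XT reference: 128-bit, 14 Gbps

ratio = rx6500xt_bw / rx5500xt_bw
print(f"RX 6500 XT: {rx6500xt_bw:.0f} GB/s vs RX 5500 XT: {rx5500xt_bw:.0f} GB/s")
print(f"That is {ratio:.0%} of its predecessor's raw bandwidth")
```

Even with faster 16 Gbps memory, halving the bus width leaves the card with 128 GB/s, about 57% of the RX 5500 XT's 224 GB/s of peak bandwidth.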

And that’s about it for now, really. Unlike Intel and Nvidia, AMD doesn’t exactly have any special technology it can offer as a “sales pitch”. The RX 6500 XT may have a few obligatory ray-tracing units, but we all know how the GTX 1660 Ti and 1660 Super handled those kinds of features, don’t we?

Oh, and as a final note, the RX 6400 also exists, but it won’t be available at retail and won’t remotely be in the same tier as any of our three main entry-level contenders.

Estimated Performance of the RX 6500 XT: Most likely approaching R9 Fury X levels